Although crawl budget may sound like technical jargon, it’s a basic concept in SEO. It determines the number of pages that search engine bots, such as Googlebot, will crawl on your website in a given period. Key pages that are never crawled cannot be indexed, and pages that are not indexed cannot appear in search results, which reduces your site’s visibility. This might not be an issue for smaller websites, but managing the crawl budget is essential for larger ones.

Optimizing your site so search engines focus on your most helpful content may improve indexing and rankings. Let’s examine what crawl budget is and how it affects your search engine optimization efforts.

1. What Is a Crawl Budget?

Your crawl budget is the number of pages on your website that search engine bots, such as Googlebot, crawl in a certain amount of time. These bots are responsible for crawling your website so that it can be indexed and appear in search engine results. Consider it similar to a daily stipend: you are allotted specific resources for crawling your pages.

Google describes crawl budget as the combination of two factors: the crawl rate limit (which Google’s documentation also calls the crawl capacity limit) and crawl demand. Crawl demand reflects how much Google wants to crawl your content, based on factors such as its popularity and how often it changes. The crawl rate limit, on the other hand, caps how frequently Google’s bots can request pages from your site without degrading its performance.

Important pages may not appear in search results if Googlebot cannot crawl them all because of a limited crawl budget. Therefore, it is essential to optimize your crawl budget properly.

Why Crawl Budget is Important for SEO

Crawl budget affects your website’s search engine visibility. When bots crawl your website, they index the content, allowing visitors to access it through search results. Important pages will not rank if they are not crawled.

Because bots can crawl the entire website within their allotted budget, a crawl budget might not be a problem for smaller websites. However, controlling the crawl budget becomes crucial for larger websites with thousands of pages. Imagine having excellent product pages or blog posts hidden from search engines because bots can’t access them.

If you optimize your crawl budget, search engines will prioritize crawling your valuable content. This can result in better indexing, higher rankings, and more organic visitors. A tool such as Indexplease may also help with crawl budget issues by submitting your pages for indexing.

2. How to Check Your Crawl Budget Using Google Search Console

Google Search Console (GSC) is one of the simplest methods to check your crawl budget. This free tool provides information on how Google indexes and crawls your website.

Steps to Check Crawl Stats in Google Search Console:

Open your GSC account and log in: Go to the website’s property you want to inspect.

Open the Crawl Stats report: You can find it under “Settings” in the left-hand menu.

Analyze Crawl Activity: The report displays the types of files crawled, average response time, and total crawl requests.

Furthermore, the Crawl Stats report indicates which parts of your website are crawled most frequently. Use it to identify areas where bots might be wasting resources, and check it regularly to stay informed about how Google interacts with your website. This knowledge helps you make decisions that get the most out of your crawl budget.
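To see where crawl activity concentrates, it can help to group crawled URLs by site section. The following is a minimal sketch, assuming you have already collected a list of URLs that bots requested (for example from your server access logs); the function name `crawl_hits_by_section` and the sample URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def crawl_hits_by_section(crawled_urls):
    """Count crawl requests per top-level path segment (e.g. /blog, /tag)."""
    counts = Counter()
    for url in crawled_urls:
        path = urlparse(url).path
        # Use the first non-empty path segment, or "/" for the homepage.
        segments = [segment for segment in path.split("/") if segment]
        section = "/" + segments[0] if segments else "/"
        counts[section] += 1
    return counts


# Hypothetical sample of URLs that a bot requested.
sample = [
    "https://example.com/",
    "https://example.com/blog/crawl-budget",
    "https://example.com/tag/seo",
    "https://example.com/tag/indexing",
    "https://example.com/tag/seo?page=2",
]

for section, hits in crawl_hits_by_section(sample).most_common():
    print(section, hits)
```

A section such as a tag archive that receives far more requests than your key content is a candidate for the clean-up steps described later.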

3. Optimizing Crawl Budget for Efficient Indexing

If you optimize your crawl budget, search engine bots focus on crawling your most valuable content. The following strategies will help you maximize your crawl budget:

Improve Website Speed

Improving a website’s performance is essential for crawl budget optimization. Minify CSS and JavaScript files, enable browser caching, and reduce the size of images. Use a Content Delivery Network (CDN) to reduce latency and ensure the hosting server is reliable. The faster a website responds, the more pages bots can crawl in the same amount of time.

Fix Broken Links

Broken links hurt user experience and waste valuable crawl budget, because search engine bots spend time trying to access pages that don’t exist. Use tools like Ahrefs or Screaming Frog regularly to find broken links on your website, then promptly fix, remove, or redirect them.
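For a quick check without a dedicated crawler, a short script can flag links that return an error status. This is a minimal sketch using only the Python standard library; the function names `fetch_status` and `find_broken_links` are hypothetical, and it assumes the target server answers HEAD requests (some servers do not, in which case a GET request would be needed).

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return the HTTP status code for a URL, or None if the request fails."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # e.g. 404 or 410
    except OSError:
        return None  # DNS failure, refused connection, timeout, ...


def find_broken_links(urls, get_status=fetch_status):
    """Return (url, status) pairs for links that answer with 4xx/5xx or not at all."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```

Calling `find_broken_links(list_of_urls)` returns the links worth fixing or redirecting; the `get_status` parameter exists so the check can be tested without network access.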

Use Robots.txt Wisely

Robots.txt is a useful tool for controlling your crawl budget. Use it to prevent search engines from crawling low-value pages such as staging areas, admin panels, or duplicate content. Keep in mind that robots.txt controls crawling rather than indexing, so a blocked URL can still be indexed if other pages link to it. Used carefully, it helps bots concentrate on your most important pages, increasing crawl efficiency.
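Before deploying new robots.txt rules, it is worth checking that they block what you intend and nothing more. The sketch below uses Python's standard `urllib.robotparser` module; the rules and paths are hypothetical examples, and the standard-library parser does simple prefix matching, so it may not reflect every extension that individual search engines support.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules; the paths are examples only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="Googlebot"):
    """Return True if the rules above allow the given bot to fetch the URL."""
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/blog/post",
    "https://example.com/admin/users",
    "https://example.com/search?q=shoes",
):
    print(url, is_crawlable(url))
```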

Update Your Sitemap

Update your XML sitemap frequently and ensure it includes only high-priority pages. Eliminate outdated or unnecessary URLs to help search engines focus on your best content. A well-maintained sitemap increases crawl efficiency by directing bots to the important pages and supports your SEO strategy.
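If your sitemap is not generated by your CMS, a small script can build it from a curated list of pages. This is a minimal sketch using the standard library; `build_sitemap` is a hypothetical helper, and the URLs and dates are placeholders. It assumes the result will be saved as a UTF-8 file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs; dates are YYYY-MM-DD strings."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical high-priority pages.
print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/products/shoes", "2024-01-10"),
]))
```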

Eliminate Duplicate Content

Duplicate content wastes crawl budget and confuses search engines. Use canonical tags to indicate which version of a page is preferred, and combine related information into a single, thorough post. Audit your website frequently to find and fix duplicate pages, which can improve both search rankings and crawl efficiency.
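During an audit, you may want to confirm which canonical URL a page actually declares. The sketch below extracts it from a page's HTML with Python's standard `html.parser` module; `CanonicalFinder` and `find_canonical` are hypothetical names, and the sample markup is illustrative.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of the first <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values, so split before checking.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


page = '<html><head><link rel="canonical" href="https://example.com/shoes"></head></html>'
print(find_canonical(page))
```

Pages that return `None`, or whose canonical points somewhere unexpected, are worth reviewing by hand.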

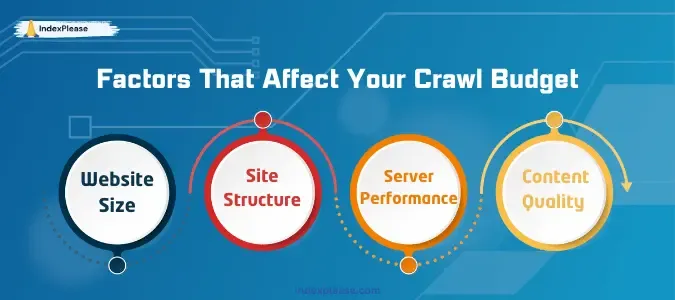

4. Factors That Affect Your Crawl Budget

The crawl budget isn’t fixed, and several factors influence it. Understanding these elements can help you better manage it.

i) Website Size

Because more pages need to be crawled and indexed, larger websites require a larger crawl budget. Use robots.txt to keep bots out of low-value or unnecessary sections so they prioritize crucial pages; noindex tags keep pages out of search results, but bots must still crawl a page to see the tag. This helps bots crawl efficiently and concentrate on your most important content.

ii) Site Structure

A well-structured website directs bots to key web pages, increasing crawl efficiency. Keep important content within three clicks of the homepage and use internal links strategically. This reduces confusion and helps bots find and index your priority pages.
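The three-click guideline can be checked with a breadth-first search over your internal links. The following is a minimal sketch, assuming you already have a map of which pages link to which (for example from a crawler export); `click_depths` and the sample link graph are hypothetical.

```python
from collections import deque


def click_depths(links, homepage):
    """Breadth-first search over internal links; returns {page: clicks from homepage}."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
site = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": ["/products/shoes"],
    "/products/shoes": ["/products/shoes/red"],
    "/products/shoes/red": ["/products/shoes/red/size-9"],
}

depths = click_depths(site, "/")
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
print(too_deep)
```

Pages listed in `too_deep` sit more than three clicks from the homepage and may benefit from an extra internal link higher up in the structure. Pages missing from `depths` entirely are not reachable through internal links at all.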

iii) Server Performance

A reliable and fast server supports efficient crawling. If your server responds slowly, bots may slow down or stop crawling entirely. To maintain steady performance and ensure bots can efficiently access and index your pages, invest in high-quality hosting, tune server settings, and monitor uptime.

iv) Content Quality

Search engines like valuable, high-quality information. Duplicate or low-value pages reduce your site’s authority and waste crawl budget. To increase indexing and general SEO performance, regularly assess content, strengthen weak pages, and prioritize original, appealing content.

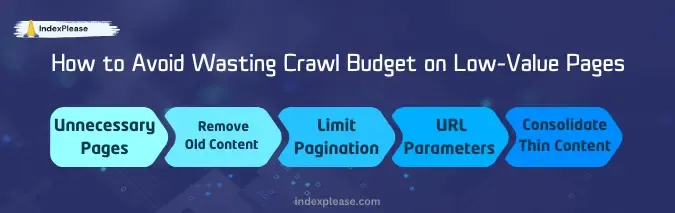

5. How to Avoid Wasting Crawl Budget on Low-Value Pages

Not every page on your site deserves crawling. Concentrating your crawl budget on high-value pages can guarantee better indexing and ranking.

i) Block Unnecessary Pages

Use the robots.txt file to stop search engine bots from wasting the crawl budget on low-value pages. Limit access to pages like internal search results, tag archives, and login screens. This helps bots concentrate on crawling and indexing valuable, pertinent information. For the pages you do want indexed, Indexplease is a tool that lets you submit selected URLs automatically or manually for fast indexing.

ii) Remove Old Content

Outdated pages might harm your website’s overall quality. Conduct routine content audits to find and eliminate pages that are no longer useful. Remove unnecessary content to increase crawl efficiency and improve your site’s user experience.

iii) Limit Pagination

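Excessive pagination strains your crawl budget, particularly on big e-commerce sites. The rel="prev" and rel="next" link tags were long recommended for helping bots navigate paginated content, but Google announced in 2019 that it no longer uses them as an indexing signal, although other search engines may still read them. It is therefore safer to make sure each paginated page is reachable through ordinary links and that important pages are linked directly, which helps search engines understand the website structure.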

iv) Minimize URL Parameters

Reducing URL parameters lowers crawl costs and helps avoid duplicate content problems. Google Search Console used to offer a URL Parameters tool for configuring parameter handling, but Google retired it in 2022, so canonical tags and robots.txt rules are the more dependable options today. Alternatively, rewrite dynamic URLs into cleaner, static formats so your content is indexed under a single address, which improves crawl efficiency and user experience.
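To find out how many parameterized variants point at the same content, you can normalize URLs by dropping parameters that do not change the page. This is a minimal sketch using `urllib.parse`; the `IGNORED_PARAMS` set is a hypothetical example and should be replaced with the parameters your own site actually uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed not to change page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}


def normalize_url(url):
    """Drop ignored query parameters and sort the rest so variants compare equal."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()
    # The fragment is dropped because it is not sent to the server.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(normalize_url("https://example.com/shoes?utm_source=news&color=red&sort=price"))
```

URLs that normalize to the same address are candidates for a shared canonical tag or a redirect to the clean version.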

v) Audit and Consolidate Thin Content

Thin content wastes the crawl budget and provides little value to users or search engines. Conduct routine site audits to find such pages. To increase relevance and SEO performance, combine related thin pages into comprehensive resources or improve them with insightful, user-focused content.

Conclusion

The crawl budget is a crucial but frequently neglected component of SEO. Managing it well helps ensure that search engines index the most important pages on your website, and a tool such as Indexplease can help with indexing problems and improve your chances of a higher ranking in Google Search.

Every action can help you maximize your crawl budget, from increasing site performance to removing low-value content. Remember that a well-optimized crawl budget improves user experience and increases site traffic in addition to helping search engines.

Therefore, examine your website and take proactive measures to manage your crawl budget. The results will make the work worthwhile.

FAQs

- What is crawl budget, and why does it matter for SEO?

The crawl budget is the number of pages that search engine bots can crawl on your website in a certain amount of time. It’s important since it affects your site’s visibility and rankings by deciding which pages are indexed and appear in search results.

- How can I check my crawl budget?

The Crawl Stats report in Google Search Console allows you to check your crawl budget. It provides information about the total number of crawl requests, response times, and the sections of your website that are being crawled.

- What factors influence my website’s crawl budget?

Website size, server performance, site structure, content quality, and update frequency are some factors that impact the crawl budget. A well-optimized website increases crawl efficiency.

- How can I optimize my crawl budget?

To optimize your crawl budget, speed up your website, fix broken links, update your sitemap, use robots.txt, and remove thin or duplicate content to guide bots to key pages.

- How can I avoid wasting the crawl budget on low-value pages?

To direct bots to high-value resources, use robots.txt to block useless pages, manage pagination, limit URL parameters, remove outdated content, and combine thin content.