Optimizing JavaScript Content for Search Indexing

JavaScript is central to modern web development because it makes interactive websites possible, including single-page applications (SPAs) and other customized elements. While this improves users’ overall experience, it also complicates search engine optimization (SEO). Unlike static HTML, JavaScript-driven content has to be rendered, either in the browser or on the server, before it exists as markup, which makes it harder for search engines to crawl and index.

Users get to enjoy robust and engaging designs, but search engines can struggle to render JavaScript-powered pages. Developments such as Google’s Web Rendering Service (WRS) have made JavaScript pages much easier for search engines to handle. Even so, content still has to be optimized before it can be indexed accurately. Understanding how JavaScript search indexing works can help your site reach a bigger audience and improve its ranking on various search engines.

What Is JavaScript Indexing?

JavaScript indexing is the process by which search engines such as Google analyze and extract content generated by JavaScript so that it can appear in their search results. Bots can easily navigate standard HTML websites, but JavaScript content is different: it requires rendering, the process in which a browser or a server executes the JavaScript code to produce the final, viewable content. For example, single-page applications built with frameworks like React or Angular depend heavily on JavaScript to fetch their content. If search engines cannot render this content, critical portions of your website are likely to go unseen, which weakens indexing and lowers search visibility.

Why JavaScript Indexing Matters for SEO

It cannot be overstated how critical proper JavaScript indexing is to a website’s usability and visibility.

- Visibility: Search engines need to be able to access all the fundamental components of a web page, such as text, images, videos, and structured data. Poor indexing of JavaScript content may leave your pages incomplete in search results.

- Competitive Advantage: Websites that use JavaScript frameworks and are optimized for effective indexing have a competitive edge, especially over sites with weaker technical SEO.

- User Experience: Many advanced websites use JavaScript frameworks to provide a better user experience. If that tailored content is ignored during indexing, your website may not appear for relevant search queries.

Challenges in JavaScript Search Indexing

While advancements like Google’s Web Rendering Service have improved JavaScript handling, several challenges persist:

- Delayed Crawling: JavaScript rendering adds an extra step to the indexing process, potentially delaying when your content is crawled and indexed.

- Rendering Limitations: Some scripts may not execute properly during rendering, leaving gaps in the indexed content.

- Dependency on External Resources: Slow-loading APIs or scripts can hinder complete content delivery, reducing SEO effectiveness.

- Fragmented Rendering Across Search Engines: While Google has sophisticated JavaScript capabilities, smaller search engines may struggle, leading to inconsistent visibility across platforms.

How Search Engines Render JavaScript

Understanding how search engines process JavaScript can help you address potential pitfalls:

- Crawling: Bots identify and queue the URLs for crawling.

- Rendering: The browser or WRS executes JavaScript, generating the final HTML document.

- Indexing: Search engines analyze the rendered content to extract meaningful information for their database.

For JavaScript-heavy websites, rendering is the bottleneck. Google’s WRS requires resources to fetch, execute, and interpret JavaScript, so ensuring efficiency is critical.
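The three stages above can be sketched as a toy pipeline. This is only an illustration of the order of operations, not a description of how any search engine is implemented; the `pages` object, its `render` functions (standing in for the JavaScript a rendering service would execute), and the `crawlRenderIndex` name are all hypothetical.

```javascript
// Hypothetical site: each page lists its links and has a `render` function
// standing in for the JavaScript that produces its final content.
const pages = {
  "/": { links: ["/about"], render: () => "Welcome to the shop" },
  "/about": { links: [], render: () => "About our team" },
};

function crawlRenderIndex(startUrl) {
  const queue = [startUrl]; // crawling: URLs waiting to be processed
  const seen = new Set(queue);
  const index = new Map(); // indexing: word -> set of URLs containing it

  while (queue.length > 0) {
    const url = queue.shift();
    const page = pages[url];
    if (!page) continue;

    // Crawling: discover and queue URLs that have not been seen yet.
    for (const link of page.links) {
      if (!seen.has(link)) {
        seen.add(link);
        queue.push(link);
      }
    }

    // Rendering: run the page's script to obtain the final content.
    const content = page.render();

    // Indexing: record which URLs contain each word.
    for (const word of content.toLowerCase().split(/\s+/)) {
      if (!index.has(word)) index.set(word, new Set());
      index.get(word).add(url);
    }
  }
  return index;
}

const searchIndex = crawlRenderIndex("/");
console.log([...searchIndex.get("team")]);
```

In this sketch the rendering step is a cheap function call; on a real site it involves fetching and executing scripts, which is why it tends to be the slow stage.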

How to Check if Google Is Indexing JavaScript Content Correctly

To confirm Google is correctly indexing your JavaScript content:

- Google Search Console: Use the URL Inspection Tool to compare raw HTML and rendered HTML. Any missing elements could signal indexing issues.

- Fetch as Google: See how Googlebot renders your content and compare the user-facing page with the bot-facing page to spot discrepancies. This is a legacy Search Console tool; in current versions its functionality is part of the URL Inspection Tool.

- Chrome DevTools: Use the “Elements” tab to inspect the rendered DOM and confirm that the content your JavaScript files generate actually appears in it.

These tools allow you to see how a search engine would view your site and solve any problems quickly.
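The raw-versus-rendered comparison can also be approximated in a script. The following is a minimal sketch, assuming you have already saved both versions of a page as strings; the helper names (`visibleText`, `missingFromRaw`) and the sample HTML are hypothetical, and the regex-based tag stripping is a rough stand-in for a real HTML parser.

```javascript
// Approximate the text visible without executing JavaScript by removing
// scripts, styles, and tags. A rough sketch, not a full HTML parser.
function visibleText(html) {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, " ")
    .replace(/<style[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Return the phrases that appear in the rendered HTML but not in the raw HTML,
// i.e. content that exists only after JavaScript has run.
function missingFromRaw(rawHtml, renderedHtml, phrases) {
  const rawText = visibleText(rawHtml).toLowerCase();
  const renderedText = visibleText(renderedHtml).toLowerCase();
  return phrases.filter((phrase) => {
    const needle = phrase.toLowerCase();
    return renderedText.includes(needle) && !rawText.includes(needle);
  });
}

// Hypothetical sample: a client-rendered shell and its rendered result.
const rawHtml = `<html><body><div id="root"></div>
<script>document.getElementById("root").innerHTML = "<h1>Blue Widget</h1>";</script>
</body></html>`;
const renderedHtml = `<html><body><div id="root"><h1>Blue Widget</h1><p>In stock</p></div></body></html>`;

const jsOnly = missingFromRaw(rawHtml, renderedHtml, ["Blue Widget", "In stock", "Checkout"]);
console.log(jsOnly); // [ 'Blue Widget', 'In stock' ]
```

Any phrase this reports depends entirely on rendering, so it is worth confirming in the URL Inspection Tool that it shows up in the rendered HTML Google sees.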

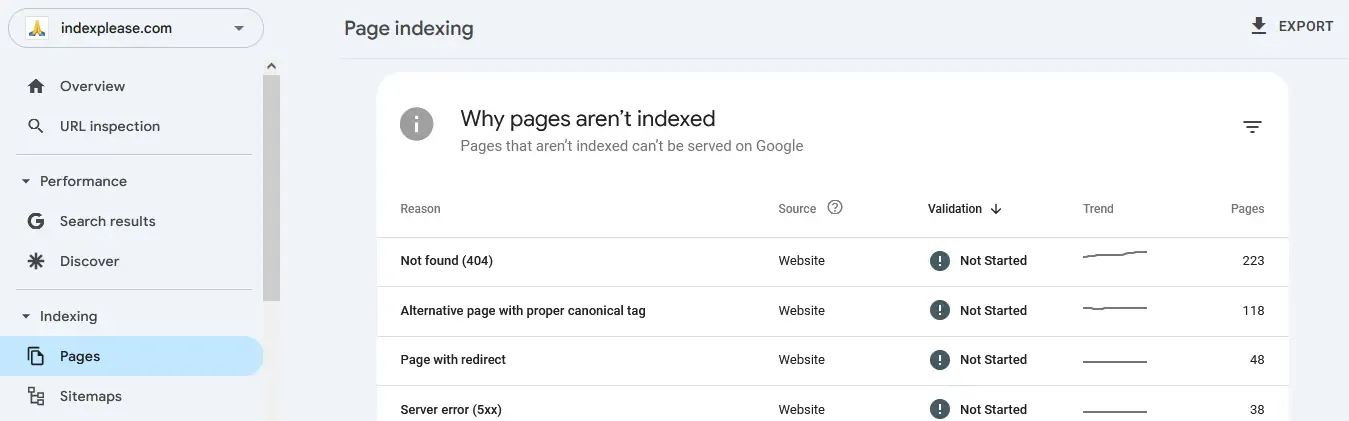

Testing JavaScript with Google Search Console

Google Search Console offers insights into your website’s indexing status:

- URL Inspection Tool: Submit a URL to see the rendered version of the page. Pay attention to any warnings or errors regarding resource loading.

- Coverage Report: Identify pages with errors, exclusions, or partial indexing due to rendering issues.

- Mobile Usability Report: Make sure your pages are mobile-friendly, as JavaScript errors can break responsive design.

Using the Fetch as Google Tool

This tool simulates how Googlebot fetches and renders your pages:

- Submit a URL and request indexing.

- Review the “Render” option to identify missing or malformed elements in the displayed content.

- Resolve missing tags and incorrectly implemented JavaScript.

This step ensures that search engines and your users see the same content, which keeps user engagement and SEO aligned.

PageSpeed Insights and Its Role

Page speed affects your ranking, and JavaScript has a direct effect on page speed. Large scripts, numerous HTTP requests, or sloppily written code can degrade the overall experience, hinder indexing, and, most importantly, hurt the ranking your site receives.

Essential Strategies:

- Compress JavaScript files using Gzip.

- Use asynchronous loading for non-critical scripts.

- Employ lazy loading for images and videos.

A faster website improves crawl efficiency and enhances user satisfaction.
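The three strategies above can be sketched in a few lines of Node.js. This is an illustration under stated assumptions: the sample `source` string stands in for a real bundle, the file paths are hypothetical, and in production gzip is normally applied by the web server or CDN rather than by hand.

```javascript
const zlib = require("node:zlib");

// Hypothetical bundle: repetitive source text stands in for a real script.
const source = "function greet(name) { return 'Hello, ' + name; }\n".repeat(200);

// Gzip the script and compare payload sizes.
const compressed = zlib.gzipSync(Buffer.from(source, "utf8"));
console.log(`original: ${Buffer.byteLength(source)} bytes, gzipped: ${compressed.length} bytes`);

// Build a <script> tag; non-critical scripts get `async` so they do not block parsing.
function scriptTag(src, { critical = false } = {}) {
  return critical
    ? `<script src="${src}"></script>`
    : `<script src="${src}" async></script>`;
}

// Build an <img> tag that uses the browser's native lazy loading.
function lazyImage(src, alt) {
  return `<img src="${src}" alt="${alt}" loading="lazy">`;
}

console.log(scriptTag("/analytics.js"));
console.log(lazyImage("/hero.jpg", "Product photo"));
```

The `defer` attribute is a common alternative to `async` when scripts must run in document order.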

Best Practices for Optimizing JavaScript for Search Engines

To ensure seamless indexing:

- Minification: Remove unneeded characters and comments to reduce file size.

- Server-Side Rendering: Render content on the server so that the bot receives a completed HTML page instead of a skeleton.

- Structured Data: Use schema.org markup to explain the semantics of your content to search engines.

- Managing External Scripts: Reduce dependency on external JavaScript files that add extra delay.
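As one illustration of the structured data practice, the sketch below builds a schema.org `Article` description as a JSON-LD script tag. The function name `articleJsonLd` and the sample values are hypothetical, and only a few of the available `Article` properties are shown.

```javascript
// Build a schema.org Article description as a JSON-LD <script> tag.
function articleJsonLd({ headline, authorName, datePublished }) {
  const data = {
    "@context": "https://schema.org",
    "@type": "Article",
    headline,
    author: { "@type": "Person", name: authorName },
    datePublished,
  };
  // Escape "<" so the JSON cannot close the surrounding <script> element early.
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}

// Hypothetical article details.
console.log(
  articleJsonLd({
    headline: "Optimizing JavaScript Content for Search Indexing",
    authorName: "A. Writer",
    datePublished: "2024-01-15",
  })
);
```

Emitting this tag in the server-rendered HTML means the structured data is available even if the page's scripts never run.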

Client-Side Rendering Versus Server-Side Rendering

Server-Side Rendering (SSR): The server sends a fully rendered web page to the user’s browser.

Client-Side Rendering (CSR): The browser runs the JavaScript to build the page, which can postpone content loading for crawlers.

Balancing SSR and CSR based on your site’s requirements ensures optimal performance and indexing.
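The difference is easiest to see in the first response each approach sends. The sketch below is a simplified illustration, not a framework's actual output; the function names, the `/app.js` path, and the product object are hypothetical.

```javascript
// Escape text before placing it in HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// CSR: the server sends an empty shell; content appears only after /app.js runs.
function renderClientShell() {
  return `<!doctype html><html><body><div id="root"></div><script src="/app.js"></script></body></html>`;
}

// SSR: the server sends finished markup, so the content is in the first response.
function renderOnServer(product) {
  return (
    `<!doctype html><html><head><title>${escapeHtml(product.name)}</title></head>` +
    `<body><div id="root"><h1>${escapeHtml(product.name)}</h1>` +
    `<p>${escapeHtml(product.description)}</p></div>` +
    `<script src="/app.js"></script></body></html>`
  );
}

// Hypothetical product.
const product = { name: "Blue Widget", description: "Fits all sizes" };
console.log(renderClientShell().includes(product.name)); // false
console.log(renderOnServer(product).includes(product.name)); // true
```

A crawler that does not execute JavaScript finds the product name only in the SSR response.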

Dynamic Rendering: A Solution for Complex Websites

Dynamic rendering can work around the obstacles that JavaScript-dependent websites and sites with sophisticated functionality pose for search engine bots. This technique serves bots a pre-rendered HTML version of your site, so they can parse and index the content seamlessly. At the same time, users are served the full dynamic content, preserving engagement and interactivity. In this way, dynamic rendering bridges what search engines expect and what users expect, so a site can be optimized for both at the same time. Note that Google’s documentation describes dynamic rendering as a workaround rather than a long-term solution and recommends server-side rendering, static rendering, or hydration where feasible.

Requests from recognized user agents, such as search bots, are detected and answered with static snapshots of your site’s important content. These snapshots contain all the SEO-critical elements, such as metadata, structured data, and visible content, which ensures proper indexing while the site retains its functionality for users. Dynamic rendering works well on sites built with client-side rendering frameworks like React, Angular, or Vue.js, ensuring that crawlers do not miss their content.

However, its implementation requires regular audits to ensure that both the pre-rendered snapshots and dynamic versions of your site are accurate, up-to-date, and functioning without errors.
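The routing decision behind dynamic rendering can be sketched as follows. This is a minimal illustration, assuming snapshots have already been generated elsewhere; the bot pattern is deliberately incomplete, and the `snapshots` store, `APP_SHELL`, and `respond` function are hypothetical names rather than part of any particular tool.

```javascript
// Incomplete, illustrative list of crawler tokens; consult each search
// engine's documentation for the user agents it actually sends.
const BOT_PATTERN = /googlebot|bingbot|duckduckbot|yandexbot|baiduspider/i;

function isSearchBot(userAgent) {
  return BOT_PATTERN.test(userAgent || "");
}

// Hypothetical snapshot store: path -> pre-rendered HTML.
const snapshots = new Map([
  ["/products", "<html><body><h1>Products</h1><ul><li>Blue Widget</li></ul></body></html>"],
]);

// The regular client-side application shell served to users.
const APP_SHELL = `<html><body><div id="root"></div><script src="/app.js"></script></body></html>`;

// Serve the snapshot to recognized bots when one exists; otherwise serve the app.
function respond(userAgent, path) {
  if (isSearchBot(userAgent) && snapshots.has(path)) {
    return { variant: "snapshot", html: snapshots.get(path) };
  }
  return { variant: "app", html: APP_SHELL };
}

const googlebotUa = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
console.log(respond(googlebotUa, "/products").variant); // snapshot
console.log(respond("Mozilla/5.0 (Windows NT 10.0) Chrome/120.0", "/products").variant); // app
```

Falling back to the regular app when no snapshot exists keeps bots from receiving an error, but it also means stale or missing snapshots go unnoticed unless they are audited.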

How IndexPlease Makes the Complex Task of JS Content Indexing Easier

Optimizing JavaScript-heavy websites for every search engine individually is painful because of crawl latency, rendering issues, and fragmented indexing. This is where IndexPlease comes into the picture.

IndexPlease helps developers and site owners make sure that their JavaScript-driven web pages are crawled and indexed appropriately. Developers using the React, Angular, or Vue.js frameworks will appreciate how IndexPlease approaches the challenge by:

✅ Ensuring Proper Rendering of JavaScript – Assists search engine bots in correctly interpreting content that loads dynamically.

✅ Instant Indexing – Your JavaScript pages and content are indexed quickly, without endless waiting for crawl cycles.

✅ Performance Improvement – Identifies and fixes problems that may prevent search engine bots from reaching valuable site components.

With IndexPlease, you are guaranteed search visibility while still delivering remarkable user experiences that make a difference in today’s competitive digital marketing landscape. This gives you an edge in JavaScript SEO over the rest of your competitors in the industry.

Conclusion

JavaScript is a double-edged sword. Search engines are getting better at working with JavaScript, but website owners and developers need to keep adapting to make sure their visibility and performance metrics stay within the ranges they want to achieve. Using Google Search Console to monitor site performance and indexing issues, together with server-side rendering, structured data, efficient script usage, and regular performance audits, helps an organization stay on top of the metrics that matter to it. Advanced strategies like dynamic rendering make it easier for bots to access the content, so that users and bots both have easy access to it. Taken together, these practices help attain higher rankings while also providing a satisfying user experience in the competitive digital ecosystem.

FAQs

- How does JavaScript impact SEO?

JavaScript can create dynamic user experiences but requires optimization to ensure search engines correctly index content.

- How can I identify JavaScript indexing issues?

Use Google Search Console, Fetch as Google, and Chrome DevTools to compare the raw and rendered content and to test rendering.

- What is the most effective method of improving the SEO aspects of JavaScript?

Minify the code to make it compact, adopt server-side rendering, and reduce the dependency on external scripts that lower a website’s performance and indexing.

- Is there always a necessity for server-side rendering?

No, it depends on your site’s complexity. For content-heavy or interactive sites, SSR often provides significant SEO benefits.

- How do third-party scripts affect indexing?

They can introduce delays or conflicts, so it’s important to monitor their performance and relevance.

- What is dynamic rendering?

Dynamic rendering serves pre-rendered HTML to search bots while delivering regular JavaScript content to users, improving SEO compatibility.