How to get indexed on Google

Simply hitting “Publish” is no longer enough. Google’s indexing systems have become more selective, more algorithmic and far less predictable, especially if you’re a small site, a new blog or just not seen as “worth crawling right now.”

And this isn’t a conspiracy; it’s algorithmic triage. Google is prioritizing content that’s fast, structured, relevant and backed by strong internal signals. Everything else? It might get crawled eventually, or it might be ignored entirely.

That’s why this guide exists. Whether you’re a solo blogger or running a content-driven SaaS site, we’ll break down:

- how Google indexing actually works,

- why some pages just never show up,

- and how to fix it, either manually or at scale.

And if you’re managing more than a few URLs a week, tools like IndexPlease can help you automate submissions, monitor indexing status and retry failed pages, without living in Search Console.

Let’s make sure your content doesn’t just exist but actually gets found.

Why Indexing Is Still a Pain Point

For all the talk about AI overviews, real-time content delivery and smarter crawling, indexing in 2025 still feels like a black box.

- You publish.

- You wait.

- Sometimes it shows up in Google Search.

- Sometimes, it never does.

Here’s why this still happens:

Google’s Index Isn’t Infinite

Google doesn’t index every page on the web. Not even close. It uses signals like content quality, freshness, site reputation and internal links to decide what’s worth indexing.

If your page is:

- Too similar to others (duplicate content)

- Light on value (thin content)

- Hidden behind poor navigation

- Slow or bloated

It may never get in.

Crawl Budget Is Real, Especially for Smaller Sites

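Google still allocates a limited crawl budget to each site, based on how much your server can handle and how much demand it sees for your URLs. That means:

- Very large sites may not get every URL crawled, and inactive sites get crawled less often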

- New blogs take longer to be discovered

- Frequent updates don’t always trigger re-crawls

AI-Fueled Search Prioritizes Clarity and Structure

Google’s AI systems now pull summaries, snippets and contextual answers from its most index-worthy sources. If your site lacks a clear hierarchy, structured data or a crawl-friendly architecture, you’ll likely be skipped in favor of better-optimized pages.

In short: indexing isn’t guaranteed. And for most site owners, that’s a serious SEO blind spot.

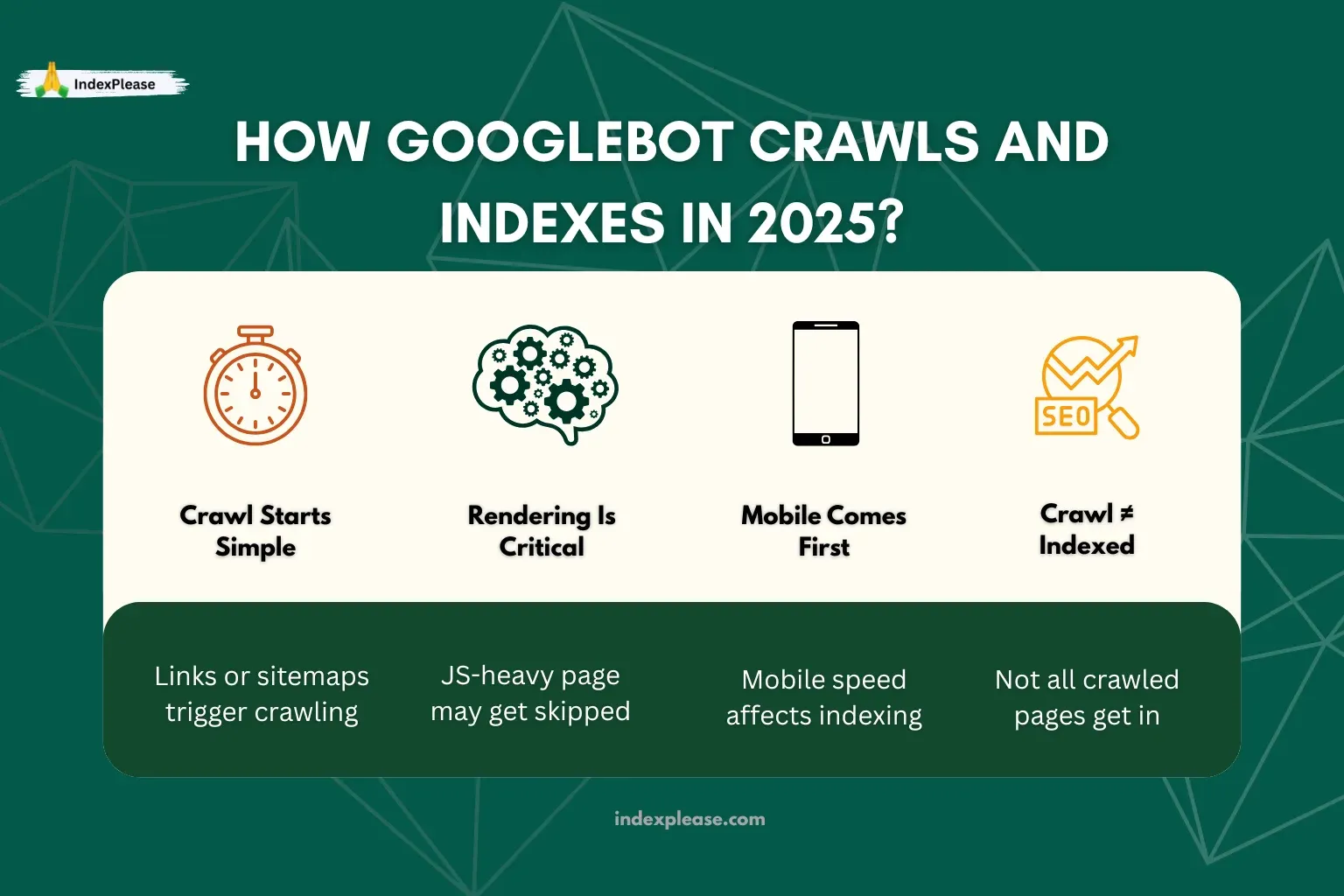

How Googlebot Crawls and Indexes in 2025

Understanding Google indexing means first understanding how Googlebot works today and how it decides which pages make the cut.

Spoiler: it’s more layered and selective than ever.

Googlebot’s Crawl Journey

- Discovery: Googlebot finds your page via a sitemap, backlink, internal link or manual submission.

- Crawl & Fetch: It requests the page and loads the HTML. Pages are then queued for rendering, so content that only appears after heavy JavaScript runs is seen later.

- Render & Analyze: It processes layout, text and code. If scripts fail or block rendering, key content may never be seen.

- Indexing Decision: Based on page quality, structure, canonical signals and user value, Google may index it… or ignore it.
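Most pages fall out at the last two steps. Before blaming crawl budget, it's worth ruling out the signals that explicitly tell Google to skip a page. Here is a minimal standard-library sketch. It assumes you have already fetched the page yourself and have the status code, response headers and raw HTML. `indexability_issues` is a hypothetical helper, and it only catches the obvious blockers (status, noindex, canonical pointing elsewhere). It does not judge quality.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class RobotsMetaParser(HTMLParser):
    """Collects robots meta directives and the rel=canonical link from raw HTML."""

    def __init__(self):
        super().__init__()
        self.robots = []        # directives from <meta name="robots"> or <meta name="googlebot">
        self.canonical = None   # href of the first <link rel="canonical">

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() in ("robots", "googlebot"):
            self.robots += [d.strip().lower() for d in attrs.get("content", "").split(",")]
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            if self.canonical is None:
                self.canonical = attrs.get("href", "")


def indexability_issues(url, status, headers, html):
    """Return reasons a fetched page is likely to be kept out of the index."""
    issues = []
    if status != 200:
        issues.append(f"HTTP status {status}")
    # Header names are case-insensitive, so normalize them before looking one up.
    lowered = {k.lower(): v for k, v in headers.items()}
    if "noindex" in lowered.get("x-robots-tag", "").lower():
        issues.append("noindex in X-Robots-Tag header")
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    if "noindex" in parser.robots or "none" in parser.robots:
        issues.append("noindex in robots meta tag")
    if parser.canonical:
        # Resolve relative canonicals against the page URL before comparing.
        target = urljoin(url, parser.canonical)
        if target.rstrip("/") != url.rstrip("/"):
            issues.append(f"canonical points elsewhere: {target}")
    return issues
```

An empty list doesn't mean Google will index the page. It only means the page isn't telling Google to stay away.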

Mobile-First + JS-Heavy = High Stakes

Mobile-first indexing is now the default: Google primarily uses the mobile version of a page for crawling and indexing, so pages must be fast and usable on mobile to qualify.

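Client-side rendering (for example, React or Vue apps without server-side rendering or prerendering) means content only appears after JavaScript runs. It can be delayed, or missed entirely if rendering fails.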

Core Web Vitals (LCP, CLS, INP) still play a role through page experience signals, and slow server responses can make Googlebot crawl your site less. Bloated or slow pages can get deprioritized.
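A quick way to see whether you depend on rendering is to check whether your key content is already in the HTML your server sends, before any JavaScript runs. The sketch below uses only Python's standard library. It ignores the contents of `<script>` and `<style>` elements and looks for phrases you expect on the page. A miss doesn't mean Google can't see the content, because Googlebot does render JavaScript. It means that content has to wait for the rendering step. The helper names are our own.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text that sits outside <script> and <style> elements."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0   # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def content_in_raw_html(html, phrases):
    """Map each phrase to True if it appears in the server-sent text."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()  # flush any buffered trailing text
    # Collapse whitespace and lowercase so formatting differences don't matter.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return {p: " ".join(p.split()).lower() in text for p in phrases}
```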

Crawl Frequency ≠ Indexing

Just because your page was crawled doesn’t mean it’s indexed.

You’ll often see:

- Crawled – currently not indexed

- Discovered – currently not indexed

- Or no mention of it at all in Google Search Console (GSC)

That’s where tools like IndexPlease help, not only by submitting, but by monitoring whether Google actually follows through.

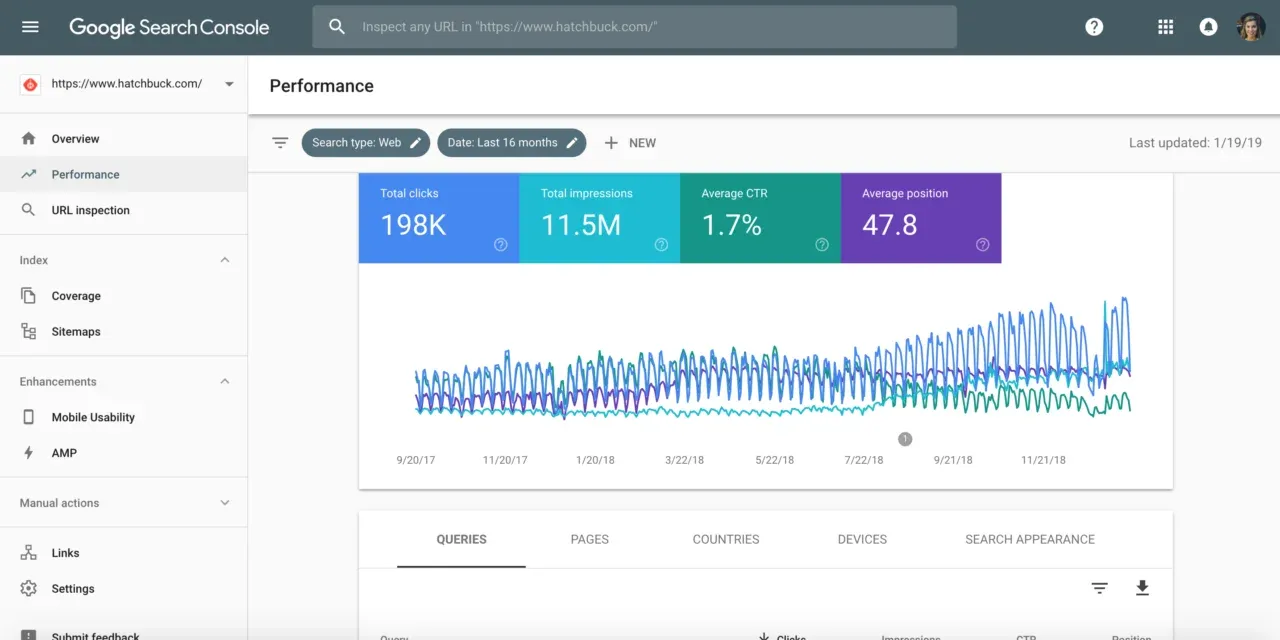

Manual Indexing Methods

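Google doesn’t offer a general-purpose API for requesting indexing. Its Indexing API is officially limited to job-posting and livestream pages, and the old sitemap “ping” endpoint was retired in 2023. For most sites, the only free option left is manual. If you’re managing just a few important pages, here’s how to do it the old-school way.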

Use the URL Inspection Tool

- Open Google Search Console

- Paste your page URL into the Inspect field (top bar)

- Google will check if the page is already indexed

- If not, click “Request Indexing”

Processing can take a few minutes. Note: requests are capped per property per day. Google doesn’t publish the exact number, but it’s commonly reported at around 10–15 submissions.
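If you want to check status for many URLs without clicking through the UI, Search Console also has a URL Inspection API. It is read-only: it reports the same index status you see in the tool, but it cannot request indexing. The sketch below makes some assumptions. You already have an OAuth access token with a Search Console scope (getting one is out of scope here). `site_url` must match your property exactly, e.g. `https://example.com/` or `sc-domain:example.com`. The helper names `inspect_url` and `summarize` are our own.

```python
import json
import urllib.request

ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def inspect_url(access_token, site_url, page_url):
    """Fetch a page's index status. Read-only: this does NOT request indexing."""
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": site_url}).encode()
    req = urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def summarize(result):
    """Pull the fields most useful for triage out of an inspection response."""
    status = result.get("inspectionResult", {}).get("indexStatusResult", {})
    return {
        "verdict": status.get("verdict"),
        "coverage": status.get("coverageState"),
        "last_crawl": status.get("lastCrawlTime"),
        "google_canonical": status.get("googleCanonical"),
    }
```

The API has its own quota, so a status checker built on it still has to pace itself.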

Resubmit Updated URLs

If you’ve significantly updated a page (new content, new structure, new title), inspecting it again and re-requesting indexing can speed things up.

Especially useful for:

- Blog updates

- New landing pages

- SEO refreshes

Submit or Resubmit Your Sitemap

Sometimes submitting your sitemap again nudges Google to recrawl missed URLs.

Steps:

- Navigate to Indexing → Sitemaps

- Enter or re-enter your sitemap URL (e.g., /sitemap.xml)

- Monitor submission and indexing reports
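Before resubmitting, it helps to confirm what the sitemap actually lists. This small standard-library sketch reads a standard `urlset` sitemap and returns each `<loc>` with its `<lastmod>`, if any. It does not follow sitemap index files, and `sitemap_entries` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# The namespace every standard sitemap declares on <urlset>.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_entries(xml_text):
    """Return (loc, lastmod) pairs from a <urlset> sitemap; lastmod may be None."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", NS):
        loc = (url.findtext("sm:loc", default="", namespaces=NS) or "").strip()
        lastmod = url.findtext("sm:lastmod", default=None, namespaces=NS)
        entries.append((loc, lastmod.strip() if lastmod else None))
    return entries
```

For example, `[loc for loc, lastmod in sitemap_entries(xml_text) if lastmod is None]` lists URLs with no `<lastmod>`. Google says it uses `lastmod` when the value is consistently accurate, so keeping it truthful is worth the effort.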

The Limitations

Manual submission is slow. There’s no bulk option. No status tracking. And no way to retry failed pages at scale.

That’s where automation becomes a necessity and IndexPlease starts to shine.

Indexing at Scale: Why Manual Doesn’t Cut It Anymore

Sure, you can paste a few URLs into Google Search Console manually. But what if you manage:

- A blog that publishes 10+ articles/week?

- 1,000+ product pages that get updated monthly?

- A portfolio of 5–10 client websites?

Manual submission becomes a bottleneck real fast.

Real-World Problems with Manual Indexing:

- Daily Limits: Google restricts how many URLs you can request per day and doesn’t tell you exactly when you hit that wall.

- No Retry System: If a page doesn’t get indexed, you have to remember and resubmit it later (manually).

- No Bulk Insights: You have to inspect each URL individually. No overview of what’s indexed vs. not.

- Inconsistent Results: You might request indexing, but the page still sits in “Discovered – currently not indexed” weeks later.
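A retry system doesn't need to be elaborate. Here is a minimal sketch of exponential backoff for URLs that still aren't indexed: each failed check doubles the wait before the next one, up to a cap. The one-day base and 14-day cap are arbitrary assumptions, and `PendingUrl` is a hypothetical record. The actual status check and resubmission are left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class PendingUrl:
    url: str
    attempts: int = 0
    next_check: Optional[datetime] = None  # None means "check as soon as possible"


def schedule_retry(item, now, base=timedelta(days=1), cap=timedelta(days=14)):
    """Record another failed check and push the next one out exponentially."""
    item.attempts += 1
    # Bound the exponent so the timedelta multiplication can't overflow.
    factor = 2 ** min(item.attempts - 1, 16)
    item.next_check = now + min(base * factor, cap)
    return item


def due(items: List[PendingUrl], now: datetime) -> List[PendingUrl]:
    """URLs whose next check time has arrived."""
    return [i for i in items if i.next_check is None or i.next_check <= now]
```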

Indexing = Workflow, Not a One-Time Task

Google’s index is dynamic. A page indexed today could be dropped next week due to:

- Changing signals

- Technical errors

- Core update recalibration

That’s why serious site owners need an automated indexing system that tracks, submits and retries, not just one-off fixes.

How IndexPlease Simplifies Google Indexing

Google Search Console is useful, but it’s not built for scale, automation or speed. If you’re serious about SEO, you need a tool that works for you, not one that makes you babysit indexing all day. That’s where IndexPlease comes in.

What IndexPlease Does (For Real):

Auto-Submit URLs (No Daily Cap)

Add your URLs and IndexPlease submits them automatically. No manual limits. No wait time.

Retry Unindexed Pages Until They Stick

Didn’t get indexed the first time? IndexPlease tries again at smart intervals, no reminders or spreadsheets needed.

Daily Monitoring

Track which URLs were:

- Crawled

- Indexed

- Still pending

- Failed (with reasons)

All in one dashboard.

Preview Metadata + Sitemap Health

See how your title and meta description are likely to appear in Google, preview your Open Graph tags and validate sitemap coverage.

Multi-Site Indexing

Manage multiple domains, ideal for agencies, publishers or SEO teams juggling multiple properties.

Whether you’re pushing out fresh content daily or trying to recover ignored pages, IndexPlease saves hours and surfaces pages Google might otherwise miss.

Best Practices to Get Indexed Faster in 2025

Indexing isn’t guaranteed, even if your content is technically solid. But these best practices will give Google every reason to include your pages in its index quickly.

1. Submit an XML Sitemap

Always. And keep it updated. Include only important, canonical, indexable pages. Remove 404s, redirects and non-SEO URLs.
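One way to enforce "only important, canonical, indexable pages" is to generate the sitemap from your own page inventory and filter as you go. This sketch assumes a hypothetical `Page` record that your crawler or CMS already produces. Its filter rules mirror the advice above.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    status: int       # last HTTP status your crawler saw
    canonical: str    # the page's declared rel=canonical target
    noindex: bool
    lastmod: str      # W3C date of the last real content change, e.g. "2025-01-10"


def build_sitemap(pages):
    """Emit a <urlset> containing only 200, self-canonical, indexable pages."""
    urlset = ET.Element("urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9")
    for p in pages:
        if p.status != 200 or p.noindex or p.canonical != p.url:
            continue  # skip 404s, redirects, noindex pages and non-canonical URLs
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = p.url
        ET.SubElement(url, "lastmod").text = p.lastmod
    return ET.tostring(urlset, encoding="unicode")
```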

2. Link New Pages Internally

Don’t leave new content stranded. Link it from:

- Your homepage

- Sidebar widgets

- High-authority older posts

This helps Google discover them faster and signals that they matter.
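To find stranded pages, you can walk your internal link graph from the homepage and see which known URLs are never reached. Here is a minimal breadth-first-search sketch. It assumes you've already extracted links into a dict shaped `{page: [linked pages]}`. It also returns each page's click depth, which is a rough proxy for how prominently the page is linked. The function names are hypothetical.

```python
from collections import deque


def click_depths(links, home):
    """BFS over an internal link graph; returns {page: clicks from home}."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def orphans(links, home, known_pages):
    """Known pages (e.g. from your sitemap) that no internal path reaches."""
    reachable = click_depths(links, home)
    return sorted(p for p in known_pages if p not in reachable)
```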

3. Use Canonical Tags Correctly

Set correct canonical URLs, especially for:

- Paginated content

- Product variants

- Duplicate paths

Incorrect canonicals can de-prioritize even your best content.
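A few canonical mistakes are easy to catch mechanically once you know each URL's declared canonical:

- Targets that aren't indexable.
- Chains, where the target itself points elsewhere.
- Paginated pages that point to page 1 instead of themselves. Google's guidance is for each page in a series to canonicalize to itself.

Here is a sketch. The `page` query parameter is an assumption about your URL scheme, and `canonical_problems` is a hypothetical helper.

```python
from urllib.parse import parse_qs, urlparse


def canonical_problems(canonicals, indexable, page_param="page"):
    """canonicals: {url: declared canonical}; indexable: URLs that return 200
    and are not noindexed. Returns {url: problem} for common mistakes."""
    problems = {}
    for url, target in canonicals.items():
        if target not in indexable:
            problems[url] = "canonical target is not indexable"
        elif canonicals.get(target, target) != target:
            problems[url] = "canonical chain: target points elsewhere"
        elif page_param in parse_qs(urlparse(url).query) and target != url:
            problems[url] = "paginated page does not canonicalize to itself"
    return problems
```

Product variants pointing to a main product page are not flagged, as long as that main page is indexable and canonicalizes to itself.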

4. Improve Load Speed and Mobile UX

Google is still mobile-first. If your pages are slow, cluttered or broken on mobile, indexing can be delayed or dropped altogether.

5. Add Schema Markup

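Use Article, Product, BreadcrumbList and FAQPage schema where relevant. This helps Google understand your content and can make pages eligible for rich results. Note that FAQ rich results are now shown only for a limited set of authoritative sites.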
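Structured data is usually embedded as a JSON-LD `<script>` tag. Here is a sketch that builds a minimal `Article` block. The property set (headline, author, dates) is only a starting point, not the full list Google's rich result guidelines recommend. `article_jsonld` is a hypothetical helper.

```python
import json


def article_jsonld(headline, author, published, modified):
    """Return a JSON-LD <script> tag describing a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,   # ISO 8601, e.g. "2025-01-10"
        "dateModified": modified,
    }
    # Escape "</" so a value containing "</script>" can't close the tag early.
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```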

6. Avoid Duplicate Pages and Crawl Traps

Clean up:

- Archive pages (/tag/, /category/)

- URL parameters

- Infinite scrolls or JS-based filters without proper rendering
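To size the URL-parameter problem, you can normalize URLs and group the ones that collapse to the same address. Normalizing here means dropping parameters that don't change content and sorting the rest. `IGNORED_PARAMS` below is an assumed example list. Which parameters are safe to ignore depends entirely on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical example: parameters assumed not to change page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort", "ref"}


def normalize(url):
    """Lowercase the host, drop ignored parameters and sort the remaining ones."""
    parts = urlsplit(url)
    query = sorted(
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/", urlencode(query), ""))


def duplicate_groups(urls):
    """Group URLs that normalize to the same address; keep only real duplicates."""
    groups = {}
    for u in urls:
        groups.setdefault(normalize(u), []).append(u)
    return {k: v for k, v in groups.items() if len(v) > 1}
```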

7. Track Indexing Status Daily

Don’t rely on chance. Use tools like IndexPlease to:

- Monitor what’s actually indexed

- Flag indexing drops

- Auto-resubmit failed URLs
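Flagging drops comes down to comparing today's status check with yesterday's. This sketch assumes you store each day's results as `{url: coverage_state}`, for example from the URL Inspection API. Treating "Submitted and indexed" and "Indexed, not submitted in sitemap" as the indexed states is an assumption based on Search Console's labels. URLs that weren't rechecked today are reported separately rather than counted as drops.

```python
# Assumed labels for states that count as "indexed".
INDEXED_STATES = {"Submitted and indexed", "Indexed, not submitted in sitemap"}


def indexing_changes(yesterday, today):
    """Compare two daily snapshots of {url: coverage_state}."""
    was = {u for u, s in yesterday.items() if s in INDEXED_STATES}
    return {
        "dropped": sorted((u, today[u]) for u in was
                          if u in today and today[u] not in INDEXED_STATES),
        "not_rechecked": sorted(u for u in was if u not in today),
        "newly_indexed": sorted(u for u, s in today.items()
                                if s in INDEXED_STATES and u not in was),
    }
```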

Doing all this consistently gives your content the best chance to be discovered, crawled and indexed where it matters, instead of just sitting live.

Final Thoughts

Google’s algorithmic filters, stricter crawl prioritization and ever-growing content saturation mean just publishing isn’t enough anymore. If a page isn’t indexed, it might as well not exist.

That’s why indexing has become a critical SEO workflow, not just a background process. Whether you’re a solo blogger or part of a growing marketing team, your goal isn’t just to create content; it’s to make sure it gets found.

And while Search Console gives you some visibility, it’s limited, manual and reactive.

Tools like IndexPlease flip the script. They:

- Push your content into Google faster

- Monitor indexing status daily

- Retry silently when Google ignores your URLs

- And simplify everything from sitemaps to page previews

Bottom line? The faster you’re indexed, the faster you can compete.

FAQs

1. Why isn’t my new blog post getting indexed by Google?

Likely reasons include too few internal links, crawl budget limits, duplicate content or incorrect canonical/noindex tags.

2. Is manual indexing still effective in 2025?

Yes, for a few high-priority pages, but it doesn’t scale and doesn’t guarantee indexing.

3. How long does it take for Google to index a page?

It can range from a few hours to several weeks, depending on your site’s authority, freshness signals and crawl frequency.

4. Does Google still use crawl budget in 2025?

Absolutely. Sites with poor internal linking, low authority or bloated URLs can have limited crawl frequency.

5. Why are some pages marked as “Crawled – currently not indexed”?

Google reviewed the content and chose not to index it. This could be due to low perceived value, duplication or thin content.

6. Does thin content still get excluded from Google’s index?

Yes. Pages with little substance, no originality or keyword stuffing often get ignored or deindexed.

7. Can IndexPlease help me fix sitemap or indexing errors?

Yes. It offers sitemap preview tools, crawl error logging and auto-resubmission for unindexed URLs.

8. How can I check if a page is indexed by Google?

Use site:yourdomain.com/page-url in Google Search for a rough check, or inspect the URL in Google Search Console for a more reliable status.

9. Should I re-submit updated content for indexing?

Yes, especially after major edits. This helps trigger re-crawling and faster reflection of changes in SERPs.

10. How is IndexPlease different from Search Console?

Search Console is manual and passive. IndexPlease is automated and scalable, and it actively monitors and resubmits URLs across multiple domains.