How to Get Indexed on Bing

Let’s be honest, most SEOs obsess over Google. But Bing quietly powers billions of searches each month across platforms you might not even think about: Microsoft Edge, ChatGPT (with browsing), Windows Search, DuckDuckGo and even Yahoo.

Ignoring Bing means leaving visibility, clicks and revenue on the table, especially when indexing isn’t guaranteed just because you hit “publish.”

Unlike Google, Bing can be surprisingly open to smaller sites, faster to index well-structured pages and less punishing with updates. But only if you play by its rules.

That’s where this guide comes in. We’ll break down exactly how Bing indexing works today, why it often fails silently and what steps you can take, from manual submissions to auto-indexing via tools like IndexPlease, to make sure your content actually gets seen.

Because ranking doesn’t matter if Bing never indexes you in the first place.

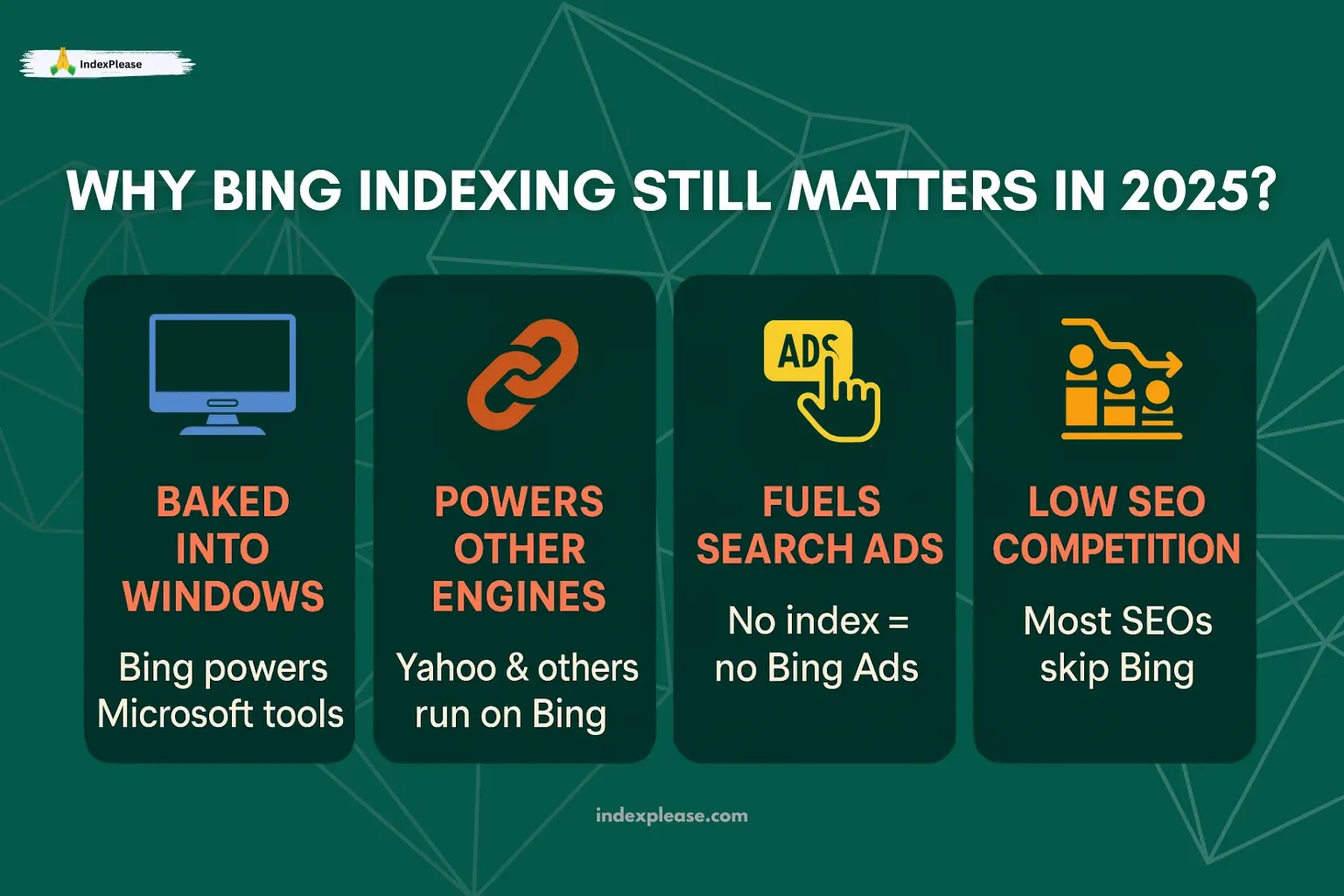

Why Bing Indexing Still Matters in 2025

Bing isn’t just “the other search engine.” It’s baked into everything Microsoft and that reach is deeper than you think:

- Microsoft Edge remains the default browser on hundreds of millions of PCs.

- Windows Copilot and ChatGPT with Bing use Bing’s search API to serve real-time web results.

- DuckDuckGo, Yahoo and other engines still rely on Bing’s infrastructure to deliver results.

- Bing Ads fuel shopping visibility across these channels, but none of it works if your site isn’t indexed.

And here’s the twist: Most SEOs still ignore Bing. That means less competition for your keywords, easier rankings and faster discovery, if your pages actually get picked up.

Bing is also evolving its own AI summarization and rich result capabilities. So structured data, schema and clean HTML aren’t just Google priorities anymore; they’re required to surface anywhere useful.

The result? Indexing on Bing is not optional. It’s a quick win for smart SEOs and lean teams.

How Bingbot Crawls and Indexes Your Site

Bing uses its own crawler, Bingbot, to discover, fetch and index pages across the web. While similar in purpose to Googlebot, Bingbot has a few key differences that make its behavior unique:

What Bingbot Prioritizes:

- Sitemaps first: Bing leans heavily on XML sitemaps and doesn’t do well with discovery via random backlinks or internal links alone.

- robots.txt compliance: Bingbot respects crawl directives strictly, even more so than Googlebot. A misconfigured `Disallow` can silently block everything.

- Mobile-ready, fast pages: With Bing powering results on mobile devices and in AI assistants, speed and mobile-responsiveness are now essential.

- Structured content: Bing rewards sites with clean, structured HTML and schema.org markup, especially for articles, products and local businesses.

Indexing Quirks to Watch:

Bingbot crawls less frequently than Googlebot for smaller or new sites. That means if you’re not actively submitting, you might not get indexed at all.

Canonical tags are strictly followed, even if incorrect. Bing won’t second-guess a bad canonical setup.

JavaScript-heavy pages (like infinite scroll or client-side rendering) often confuse Bingbot unless pre-rendered.

Bottom line? Bing favors sites that are clean, structured and upfront about what they want indexed. And if you’re not submitting your pages manually or using a tool like IndexPlease, there’s a good chance Bing never even sees your content.

Manual Submission Process

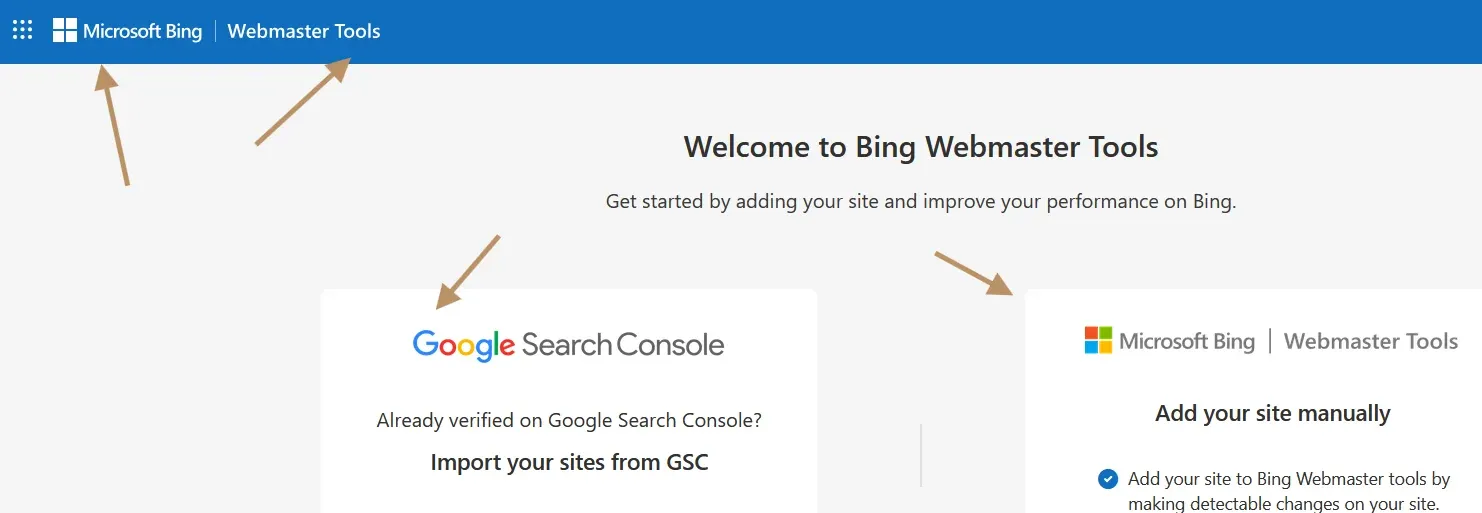


If you’re managing a small-to-medium site and want to ensure specific pages get indexed, Bing Webmaster Tools (BWT) is your starting point.

Here’s how to submit your content the manual way:

Step-by-Step: Submit URLs to Bing

- Sign in to Bing Webmaster Tools.

- Add your site (if it isn’t added yet).

- Verify ownership via DNS, HTML tag or file upload.

- Navigate to “URL Submission” under the “Configure My Site” section.

- Paste up to 10,000 URLs per day (as of 2025) for indexing.

Yes, Bing gives generous submission limits, especially compared to Google. But that doesn’t guarantee your URLs will be indexed. It just means they’ll be crawled faster.

Submit Your Sitemap (Must-Do)

You should also submit your sitemap manually here:

- Go to the Sitemaps tab

- Submit your XML sitemap (e.g., `/sitemap.xml`)

- Bing will re-crawl it periodically, but the frequency is inconsistent, which is another reason to consider automation

Live URL Inspection

BWT also allows live URL inspection, where you can:

- See crawl status

- Identify indexing blocks (like noindex or canonical issues)

- View HTML as Bingbot sees it

This is critical if pages are stuck in “Discovered” limbo or showing up incorrectly in search.

Manual submission works, but it’s tedious at scale. If you’re juggling hundreds or thousands of pages across multiple sites, you’ll need a better system.

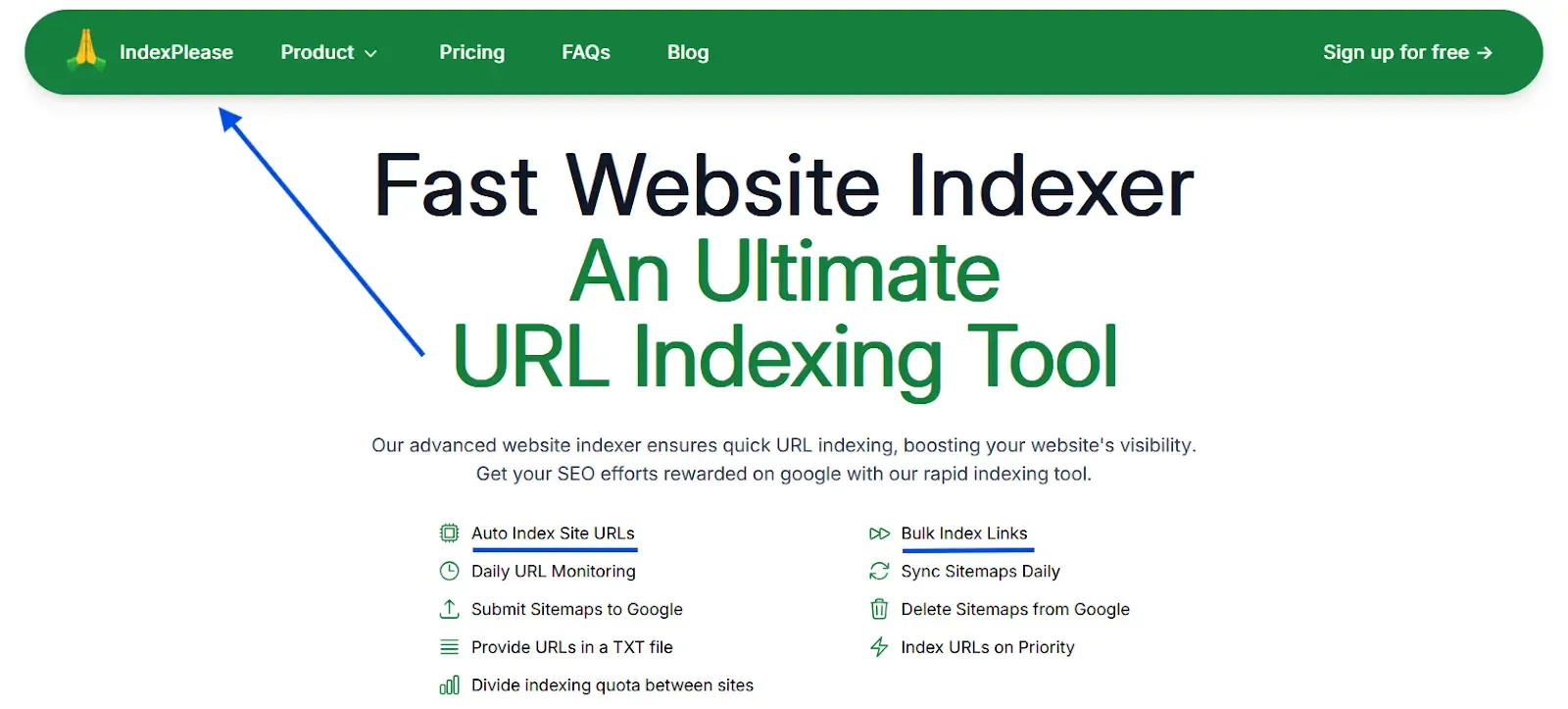

Bulk Submission & Auto Indexing with Tools

Manual submission is fine for a handful of URLs, but what if you’re running a content-heavy blog, managing clients or maintaining a site with dynamic pages that change daily?

That’s where bulk indexing tools come in.

The Problem with Doing It Manually:

- You’re limited by time: even if Bing allows 10,000 submissions/day, who’s pasting them one by one?

- Sitemaps aren’t enough if Bing skips your pages or refreshes slowly

- You can’t track which URLs failed, why or when they were last crawled
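One way to avoid pasting URLs by hand is to script the submission. The sketch below batches a URL list and builds one POST request per batch. It assumes the endpoint and JSON payload shape of Bing’s URL Submission API (`SubmitUrlbatch`) as publicly documented; verify both against the current Bing Webmaster Tools documentation before relying on it. The batch size of 500 and the function names are illustrative choices, not values taken from this guide.

```python
import json
import urllib.parse
import urllib.request

# Assumption: endpoint and payload follow Bing's documented SubmitUrlbatch call.
BING_ENDPOINT = "https://ssl.bing.com/webmaster/api.svc/json/SubmitUrlbatch"
BATCH_SIZE = 500  # hypothetical batch size, chosen for illustration only


def chunk(urls, size=BATCH_SIZE):
    """Split a list of URLs into batches of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_submit_request(api_key, site_url, urls):
    """Build (but do not send) a POST request for one batch of URLs."""
    query = urllib.parse.urlencode({"apikey": api_key})
    payload = json.dumps({"siteUrl": site_url, "urlList": urls}).encode("utf-8")
    return urllib.request.Request(
        f"{BING_ENDPOINT}?{query}",
        data=payload,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


def submit_all(api_key, site_url, urls):
    """Send every batch and return the HTTP status code of each call."""
    statuses = []
    for batch in chunk(urls):
        request = build_submit_request(api_key, site_url, batch)
        with urllib.request.urlopen(request, timeout=30) as response:
            statuses.append(response.status)
    return statuses
```

Keeping request construction separate from sending makes it easy to log or dry-run a batch before anything reaches Bing. The API key would come from your own Bing Webmaster Tools account.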

How Tools Like IndexPlease Help

IndexPlease offers a scalable solution designed for digital publishers, agencies and large sites:

- Bulk Submit to Bing + Google: Upload 100s or 1000s of URLs. IndexPlease sends them through API-integrated or supported channels.

- Daily Monitoring: See which URLs are indexed, pending or failed. No guesswork.

- Unlimited URL Support: No cap per day or month; all plans allow you to submit as many URLs as you need.

- Multi-Site Support: Perfect for agencies managing clients or site networks. Add multiple domains and handle indexing centrally.

In short, IndexPlease replaces hours of repetitive Bing Webmaster Tools work with one smart dashboard, helping your pages show up faster, more reliably and with zero manual stress.

Common Indexing Errors on Bing

Even after submitting URLs or sitemaps, your pages might still not appear in Bing Search. Here are the most frequent issues and how to avoid them:

1. “Discovered but Not Crawled”

- Bing sees the URL but hasn’t fetched the content yet.

- Fix: Improve internal linking to the page and resubmit it manually or through a tool like IndexPlease.

2. Blocked by robots.txt

- A forgotten `Disallow: /` or rule targeting a folder (like `/blog/`) could stop Bingbot entirely.

- Fix: Audit your `robots.txt` file and test it in Bing Webmaster Tools.
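A quick local check can catch a blanket block before Bingbot does. The sketch below uses Python’s standard `urllib.robotparser` to ask whether a given user agent may fetch a URL under a given robots.txt body. The sample rules and the `example.com` URLs are made up for illustration, and the standard-library parser does plain prefix matching, so its verdict on wildcard rules may differ from Bingbot’s.

```python
from urllib.robotparser import RobotFileParser


def bingbot_can_fetch(robots_txt, url, user_agent="bingbot"):
    """Return True if `user_agent` may fetch `url` under these robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A forgotten blanket rule: every path is blocked for every crawler.
BROKEN = """\
User-agent: *
Disallow: /
"""

# A narrower rule: only the admin area is blocked.
FIXED = """\
User-agent: *
Disallow: /admin/
"""

if __name__ == "__main__":
    for label, rules in (("broken", BROKEN), ("fixed", FIXED)):
        allowed = bingbot_can_fetch(rules, "https://example.com/blog/post")
        print(label, "allows /blog/post:", allowed)
```

Treat this as a pre-flight check only; the tester in Bing Webmaster Tools remains the reference for how Bingbot reads the file.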

3. Noindex Tags in Templates

- CMS themes or plugin defaults might be applying noindex to archives or custom post types.

- Fix: Check global and per-page settings, especially if you use SEO plugins.
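To spot a stray noindex coming from a template, you can scan the rendered HTML for robots meta tags. This is a minimal sketch using the standard `html.parser` module; it looks at `<meta name="robots">` and `<meta name="bingbot">` only and does not inspect the `X-Robots-Tag` HTTP header, which can also carry a noindex directive. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from robots/bingbot meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in {"robots", "bingbot"}:
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def has_noindex(html):
    """Return True if any robots/bingbot meta tag contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

Running `has_noindex` over one page of each template type (post, archive, custom post type) is usually enough to find which template is responsible.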

4. Canonical Confusion

- Bing obeys canonical tags strictly. If you canonicalize the wrong URL or another domain, your page won’t index.

- Fix: Review canonical logic. Make sure they match the primary version of your content.
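The canonical review can be partly automated. The sketch below extracts the first `<link rel="canonical">` from a page and classifies it relative to the page’s own URL. The four labels it returns and the trailing-slash normalization are illustrative assumptions; your site may need stricter or looser comparison rules.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class CanonicalParser(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attr.get("href")


def canonical_status(page_url, html):
    """Classify the canonical tag as missing, cross-domain, points-elsewhere or self."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonical:
        return "missing"
    target = urljoin(page_url, parser.canonical)  # resolve relative hrefs
    if urlsplit(target).netloc.lower() != urlsplit(page_url).netloc.lower():
        return "cross-domain"
    if target.rstrip("/") != page_url.rstrip("/"):
        return "points-elsewhere"
    return "self"
```

Pages that come back as `cross-domain` or `points-elsewhere` are the ones to review first, since those are the cases described above where the page itself may not be indexed.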

5. Low Domain Activity = Crawl Budget Issues

- Infrequently updated or new sites may get ignored for weeks.

- Fix: Publish regularly, drive traffic and submit URLs directly to Bing.

6. Sitemap Errors

- Outdated or invalid sitemap formats may silently break your indexing pipeline.

- Fix: Ensure sitemaps are XML-compliant, updated and reachable from your robots.txt.
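A basic sanity check on the sitemap file can surface these silent failures early. The sketch below parses a sitemap with the standard library and reports a few common problems. It only handles a plain `urlset` file (a sitemap index would be flagged as an unexpected root), and the specific checks are an illustrative subset, not a full validator.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def sitemap_problems(xml_text):
    """Return a list of readable problems; an empty list means none were found."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    problems = []
    if root.tag != f"{{{SITEMAP_NS}}}urlset":
        problems.append(f"unexpected root element: {root.tag}")

    locs = [el.text.strip() for el in root.iter(f"{{{SITEMAP_NS}}}loc") if el.text]
    if not locs:
        problems.append("no <loc> entries found")
    for loc in locs:
        # Sitemap URLs must be absolute.
        if not loc.startswith(("http://", "https://")):
            problems.append(f"non-absolute URL: {loc}")
    return problems
```

A check like this fits well in a deploy step, so a broken sitemap never reaches production unnoticed.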

7. Missing Open Graph or Meta Info

- While not always a blocker, missing meta descriptions or OG tags may reduce priority in indexing queues.

- Fix: Fill out basic meta fields to help Bing understand your page context better.

Understanding these pitfalls is half the battle. In the next section, we’ll walk through best practices to ensure your content gets indexed faster and more reliably.

Best Practices to Ensure Fast Bing Indexing

Want your content to appear in Bing Search faster and stay there? Follow these proven techniques to stay on Bingbot’s good side.

1. Submit XML + HTML Sitemaps

- XML sitemap helps Bingbot discover structured URLs.

- HTML sitemap gives crawlable, internal links, especially for low-depth or orphaned pages.

Bonus: Link to your sitemap in robots.txt for auto-discovery:

Sitemap: https://yoursite.com/sitemap.xml
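If your CMS does not generate a sitemap for you, a small script can. The following is a minimal sketch that builds a sitemaps.org-style `urlset` from a list of URLs and produces the matching robots.txt line. The function names and the sample URLs are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is YYYY-MM-DD or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def robots_sitemap_line(sitemap_url):
    """Return the robots.txt directive that advertises the sitemap."""
    return f"Sitemap: {sitemap_url}"


if __name__ == "__main__":
    print(build_sitemap([
        ("https://yoursite.com/", "2025-06-22"),
        ("https://yoursite.com/blog/", None),
    ]))
    print(robots_sitemap_line("https://yoursite.com/sitemap.xml"))
```

`ElementTree` escapes special characters such as `&` in URLs, which is one of the more common causes of an invalid hand-written sitemap.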

2. Keep Your Robots.txt Clean

- Avoid wildcard blocks (`Disallow: /*?`)

- Allow important sections like `/blog/`, `/product/` or `/services/`

- Test with Bing Webmaster Tools’ robots.txt tester

3. Eliminate Crawl Traps

Bing still struggles with:

- Infinite scroll / AJAX pagination

- Tag or category archives with low value

- Calendar links or duplicate filter-based URLs

Use canonical tags and noindex where needed or block in robots.txt if safe.

4. Use Schema Markup

Structured data helps Bing:

- Understand page purpose

- Qualify for enhanced listings

- Prioritize indexing for content like articles, products, FAQs

Start with:

- `Article`, `BreadcrumbList`, `Product`, `LocalBusiness`

- Validate with Schema.org Validator
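As a starting point, `Article` markup can be generated as JSON-LD and dropped into the page head. The sketch below builds a small `Article` object and wraps it in a script tag. The chosen properties are a minimal illustrative set, and the headline, URL, date and author passed in the usage line are placeholders.

```python
import json


def article_jsonld(headline, url, date_published, author_name):
    """Return a <script> tag containing schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": url,
        "datePublished": date_published,
        "author": {"@type": "Person", "name": author_name},
    }
    # Escape "</" so a value can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(article_jsonld(
        "How to Get Indexed on Bing",
        "https://yoursite.com/blog/bing-indexing/",
        "2025-06-22",
        "Jane Doe",
    ))
```

Run the output through a structured data validator before shipping it, since required and recommended properties vary by type.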

5. Strengthen Internal Linking

- Link to new pages from high-authority existing ones

- Avoid burying content too deep (e.g., `/blog/2025/06/22/keyword/`)

More internal signals = more crawl priority.
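A rough way to find buried pages is to count path segments in each URL. The sketch below does that with `urllib.parse` and flags anything deeper than a threshold. The threshold of 3 is an arbitrary illustrative value, and path depth is only a proxy: what matters for crawling is how many clicks a page sits from a well-linked page, which this does not measure.

```python
from urllib.parse import urlsplit


def path_depth(url):
    """Count the non-empty path segments in a URL."""
    return len([seg for seg in urlsplit(url).path.split("/") if seg])


def too_deep(urls, max_depth=3):
    """Return the URLs whose path depth exceeds `max_depth` (hypothetical threshold)."""
    return [u for u in urls if path_depth(u) > max_depth]


if __name__ == "__main__":
    sample = [
        "https://yoursite.com/blog/2025/06/22/keyword/",
        "https://yoursite.com/blog/keyword/",
    ]
    for url in sample:
        print(path_depth(url), url)
    print("flagged:", too_deep(sample))
```

Feeding this the URL list from your sitemap gives a quick shortlist of pages that may deserve a link from a hub or category page.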

6. Use Tools for Daily Monitoring

If you’re managing scale, manually checking Bing just doesn’t cut it. Tools like IndexPlease handle:

- Daily crawl status checks

- URL retries

- Submission logs

- Index verification

Apply these practices consistently and you’ll notice faster indexing, better visibility and less guesswork.

Final Thoughts

Bing might not dominate the headlines, but it quietly powers some of the most underrated SEO opportunities on the web. From Windows Copilot and ChatGPT integrations to powering third-party search engines, Bing isn’t something SEOs can afford to ignore anymore. But indexing isn’t automatic.

If your pages aren’t getting picked up or, worse, you’re not even checking, then you’re missing out on entire traffic pipelines. Bing rewards sites that are structured, crawlable and proactive. And tools like IndexPlease take the manual grind out of the equation by automating submissions, retries and monitoring at scale.

So whether you’re running a small blog or a sprawling eCommerce empire, now’s the time to make Bing indexing part of your SEO workflow.

FAQs

1. Is Bing indexing slower than Google in 2025?

Yes, especially for new or inactive sites. Bing crawls less frequently unless pages are submitted or linked from strong domains.

2. How often should I resubmit my URLs to Bing?

If a page isn’t indexed within a week, resubmit. Tools like IndexPlease automate retries, saving manual effort.

3. What’s the daily URL submission limit on Bing Webmaster Tools?

Bing currently allows up to 10,000 URL submissions per day per site. That’s generous, but still manual unless you automate.

4. Why are some URLs stuck in “Discovered” status?

Bing sees the link but hasn’t crawled it. Possible reasons: weak internal linking, crawl budget limits or canonical conflicts.

5. Does Bing index AI-generated content?

Yes, but it favors clear, structured, human-readable pages. Overly spun or low-value AI text may get deprioritized.

6. Can IndexPlease help me get indexed on Bing?

Absolutely. It handles bulk submissions, auto-retries and monitoring, all Bing-compatible.

7. What if Bingbot isn’t crawling my site at all?

Check your robots.txt, verify in Bing Webmaster Tools and manually submit your homepage + sitemap.

8. Does Bing respect canonical tags the same way as Google?

Even more strictly. If your canonical tag points elsewhere, Bing might not index your actual page.

9. Is Bing indexing tied to ChatGPT and AI tools?

Yes. Bing Search results often surface in ChatGPT (with browsing), Windows Copilot and even Edge sidebar AI.

10. Should I even focus on Bing if most of my traffic is from Google?

Yes, Bing often has lower competition and ranking is easier for smaller sites. Plus, Bing traffic converts surprisingly well.