It can be quite beneficial trying to find the optimal setting for search engine indexing and crawling your site. Yet, so many webmasters fail to grasp the concept of Noindex and how this sets itself apart from Disallow in robots.txt. As always, Google has shed some light on these concepts, and knowing how to use them can help enhance your site’s visibility.

This blog is written to help in clarifing the concepts surrounding blocking pages from being crawled and indexed, so if you have ever found yourself confused on the topic rest assured.

Robots.txt and Its Significance to SEO

The robots.txt file does not allow any search engines indexing your website without authorization. In other words, it instructs the bot about which sections of the website need to be accessed. However, it primarily does not serve to streamline the prospect of being indexed or not, and this is where no-index along with Disallow come in.

In the Case Search Engines Read Your Robots.txt File

When a bot for a search engine crawls your site, it uses the robots.txt file as the required resource to scope out potential no-go folders or pages. But Google has made it very clear that while crawling, a Disallow rule only prevents sites from being crawled. This means that as long as the sources linking to the blocked page exist, the blocked can and will appear because it is linked to another page on the search results.

Retrieving Mistakes In Robots.txt

It is a common misconception among many site administrators that employing Disallow in robots.txt will take pages out of the search results entirely. Sadly, this is not always the case. Disallow does not guarantee de-indexing, as pointed out by John Mueller of Google. For that, you need no-index.

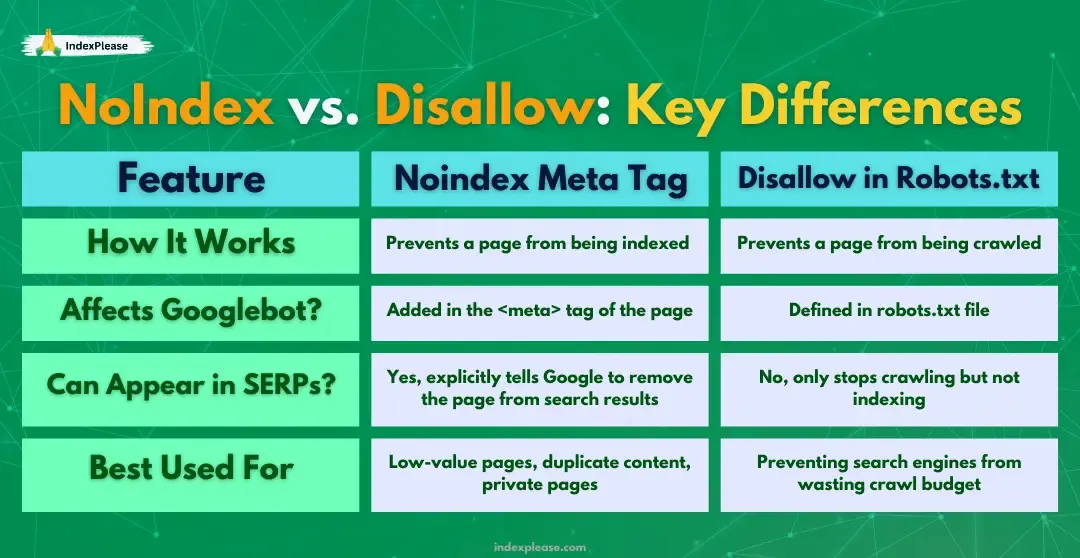

NoIndex vs. Disallow: Key Differences

Both no-index and Disallow help manage search engine visibility, but they serve different purposes.

When to Use Noindex

- Pages with sensitive or duplicate content that should not appear in search results

- Thank-you pages, login pages, or temporary landing pages

- Search result pages within a website that don’t add value to search engines

- Archive or outdated pages that should be phased out

To implement no-index, add this meta tag into the section of your HTML:

This tag prevents Google from indexing the page while still allowing it to follow the links within the page.

When to Use Disallow

- Pages that consume crawl budget but do not need to be indexed

- Admin sections, staging environments, and private directories

- Large media files, like PDFs, that should not be crawled

- Filtered category pages that create duplicate content

To block crawlers, add this directive to robots.txt:

This tells search engine bots not to crawl /private-page/. However, if someone links to it, Google may still index it.

What Google Recommends for SEO

Google recommends using noindex when you want to remove pages from search results entirely. If you only want to stop Google from crawling a page but don’t mind it being indexed, Disallow is sufficient.

Why Noindex is More Reliable Than Disallow

John Mueller has flagged that pages can still be indexed by Google even if they are linked somewhere else, which is not a very trustworthy approach to depend only on Disallow. The sure short way of ensuring that a page doesn’t exist in John Mueller’s eyes is to rely upon noindex.

This illustrates why depending on Disallow alone can be dangerous. Rather than adjusting the robots.txt files and meta tags by hand, IndexPlease allows webmasters to take charge of their indexing procedures. With real-time indexing capabilities, it guarantees important pages get indexed and unwanted pages are kept out of search results. This eliminates the time wasted and the guesswork associated with SEO.

Important Note: Google no longer supports noindex directives inside robots.txt. If you previously used noindex in robots.txt, switch to meta tags or HTTP headers. This will, however, not help with direct links.

Effectively managing indexing requires the optimization of crawl budgets alongside correct noindex tag application. However, ad-hoc updating of the robots.txt files and the manual insertion of the noindex tags is extremely mundane. Because of this, IndexPlease makes this problem obsolete by helping businesses implement an effortless noindex tagging strategy. Whether instant indexing is required for new content or search engines need to be blocked from irrelevant pages, this tool makes sure that the SEO strategy remains intact.

Best Practices for Managing Indexing

To optimize your SEO strategy, follow these best practices when using noindex and Disallow:

- For pages that should not appear in search results, always use noindex.

- Use Disallow to control crawl budget and prevent unnecessary crawling.

- Combine Disallow with noindex when you want to prevent both crawling and indexing.

- Try not to block critical resources such as CSS and JavaScript in robots.txt as it might have consequences on page rendering.

- Remember to audit your robots.txt file along with noindex utilization periodically for efficiency in SEO.

Conclusion

Knowing and comprehending the distinction between noindex and Disallow is important on how you aim to optimize your website index visibility on the search engines. Disallowed pages will not be crawled but this does not mean that these pages will not be shown in the search results. noindex on the flip side removes a page from the index, therefore, being much more useful in removing content from the index.

For Business and website owners that do not want to deal with such complexities in managing the index, tools like IndexPlease are a step ahead in offering such solutions. Rather than editing and manipulating a robots.txt file, or placing meta tags on an unlimited number of pages, IndexPlease takes the workout of the process and makes sure your content is indexed while the non-essential pages are blanked onto the search results.

With a careful combination of automation on noindex, Disallow, and indexing, it is possible to optimize the website’s SEO, increase crawlability, and take complete control over the search visibility. Audits and having best practices will put you ahead of competition in the ever-changing world of Search Engine Optimization.

FAQs

Does using Disallow command remove a page from Google search results?

No. If any page was previously indexed using Disallow will not delete it, instead will block it from crawling. You need a noindex command in order to delete it.

How long does it take Google to remove a noindexed page?

It depends on how frequently Google crawls, but usually within a few weeks. You can expedite the process with Google Search Console using the ‘Remove URLs’ option.

Does robots.txt help in SEO?

Not all the time. It does assist search engine robots, but if used in the wrong way, it can do more harm than good. Manual indexing management is a heavy burden to bear, so explode your competition with IndexPlease. This tool helps automate indexing, assuring that vital pages are indexed while unnecessary ones are hidden from search results. Rather, It’s easier to just set robots.txt and noindex tags to almost every page and shred your SEO performance.

What do you think would happen when an entire directory is blocked in robots.txt?

The directory will not be crawled by search engines, however if links to the pages are fetched, it’s possible for them to show in search results.

Is noindex necessary for paginated content?

No. Google suggests using proper pagination such as rel=.”next” or rel=.prev” instead of no index to avert any SEO complications.